Project Overview

Managing infrastructure securely and repeatably is essential for modern DevOps workflows. This guide builds an end-to-end Infrastructure-as-Code (IaC) pipeline that provisions an AWS EKS cluster with Terraform, automates the run with Jenkins, and keeps sensitive credentials in HashiCorp Vault.

High-Level Architecture

1. Jenkins Server:

- Deployed on an EC2 instance to manage CI/CD pipelines.

- Jenkins plugins installed: Terraform, Pipeline, AWS Credentials, and HashiCorp Vault.

2. Vault Server:

- Configured on a separate server to securely store and retrieve secrets (AWS and GitHub credentials).

- Secrets stored:

- aws/terraform-project: Contains aws_access_key_id and aws_secret_access_key.

- secret/github: Contains GitHub PAT (pat).

3. Terraform Configurations:

- Infrastructure as Code (IaC) to provision:

- VPC, Subnets

- EKS Cluster

- Worker Nodes

4. CI/CD Pipeline:

- Jenkins pipeline retrieves secrets from Vault and runs Terraform stages for EKS provisioning.

Prerequisites

1. AWS Account:

- Ensure IAM permissions:

- AmazonEKSFullAccess

- AmazonEC2FullAccess

- IAMFullAccess
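Before running the pipeline, it can help to confirm that the identity behind the access keys really has these three managed policies. The sketch below is only one way to do that: it assumes the keys belong to an IAM user (not a role) and that the policies are attached directly to that user rather than through a group. `has_required_policies` is a hypothetical helper name.

```shell
# Managed policies named in the prerequisites list above.
REQUIRED_POLICIES="AmazonEKSFullAccess AmazonEC2FullAccess IAMFullAccess"

# has_required_policies USER_NAME
# Prints each missing policy and returns non-zero if any is missing.
has_required_policies() {
    user_name="$1"
    # Names of managed policies attached directly to the user (tab-separated).
    attached=$(aws iam list-attached-user-policies \
        --user-name "$user_name" \
        --query 'AttachedPolicies[].PolicyName' \
        --output text) || return 2

    missing=0
    for policy in $REQUIRED_POLICIES; do
        # Unquoted echo collapses tabs and newlines into single spaces.
        case " $(echo $attached) " in
            *" $policy "*) ;;
            *) echo "missing: $policy"; missing=1 ;;
        esac
    done
    return "$missing"
}
```

For example, `has_required_policies terraform-user` (the user name is a placeholder) prints nothing and returns 0 when all three policies are attached.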

2. EC2 Server for Jenkins:

- Jenkins installed and configured with required plugins.

- Vault credentials (vault-role-id, vault-secret-id, VAULT_URL) configured in Jenkins Credentials Store.

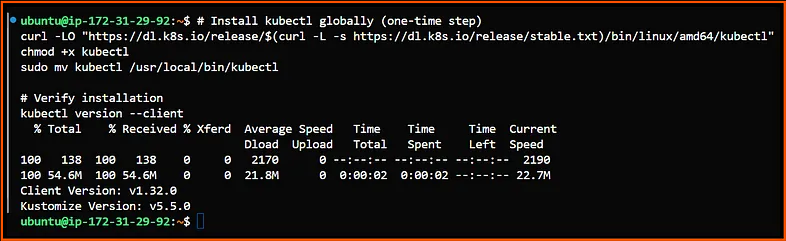

- kubectl preinstalled on the Jenkins server.

3. HashiCorp Vault Server:

- Vault configured with secrets:

- aws/terraform-project → AWS credentials.

- secret/github → GitHub PAT.

- AppRole authentication configured (vault-role-id and vault-secret-id).
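As a rough sketch, the Vault side could be prepared with the Vault CLI along these lines. Several things here are assumptions: `VAULT_ADDR` and an admin `VAULT_TOKEN` are already exported, `aws/` is a KV secrets engine mounted at that path (not the AWS secrets engine), a policy granting read access to both paths already exists, and the role name `jenkins` and policy name `jenkins-read` are placeholders.

```shell
# setup_vault_for_jenkins GITHUB_PAT AWS_KEY_ID AWS_SECRET
setup_vault_for_jenkins() {
    github_pat="$1"; aws_key_id="$2"; aws_secret="$3"

    # Store the two secrets at the paths the pipeline reads from.
    vault kv put secret/github pat="$github_pat" || return 1
    vault kv put aws/terraform-project \
        aws_access_key_id="$aws_key_id" \
        aws_secret_access_key="$aws_secret" || return 1

    # Enable AppRole (the error is ignored if it is already enabled).
    vault auth enable approle || true

    # Create the role; "jenkins" and "jenkins-read" are assumed names.
    vault write auth/approle/role/jenkins \
        token_policies="jenkins-read" \
        secret_id_ttl=24h \
        token_ttl=1h || return 1

    # Print the values Jenkins stores as vault-role-id and vault-secret-id.
    vault read -field=role_id auth/approle/role/jenkins/role-id
    vault write -f -field=secret_id auth/approle/role/jenkins/secret-id
}
```

Passing secrets as command-line arguments exposes them to the process list and shell history, so for anything beyond a lab setup you may prefer to feed the values to `vault kv put` from a file or stdin.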

Best Practices:

3.1 Automate Credential Renewal:

- If the AppRole is configured with a secret_id TTL (for example, 24 hours), the secret_id expires, so consider automating its regeneration and updating the Jenkins credentials programmatically.

- Use Vault CLI or API scripts to refresh credentials periodically.

3.2 Secure the Jenkins Secrets:

- Ensure Jenkins credentials are properly encrypted and masked.

- Use Jenkins Secret Text credentials to avoid plain-text exposure in pipeline logs.

3.3 Test Before Deploying:

- Always validate the new role_id and secret_id using the Vault CLI before updating Jenkins.
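The renewal and "test before deploying" practices above could be scripted roughly as follows. This is a sketch, not a complete rotation job: it assumes the Vault CLI is on the PATH with `VAULT_ADDR` and a suitably privileged `VAULT_TOKEN` exported, the function names are made up for illustration, and pushing the new value into the Jenkins credentials store is left out.

```shell
# approle_login_ok ROLE_ID SECRET_ID
# Returns 0 only if Vault issues a non-empty token for the pair.
approle_login_ok() {
    token=$(vault write -field=token auth/approle/login \
        role_id="$1" secret_id="$2" 2>/dev/null) || return 1
    [ -n "$token" ]
}

# new_secret_id ROLE_NAME
# Asks Vault for a fresh secret_id for the given AppRole and prints it.
new_secret_id() {
    vault write -f -field=secret_id "auth/approle/role/$1/secret-id"
}

# rotate_and_verify ROLE_NAME ROLE_ID
# Prints a new secret_id only after confirming that it can log in.
rotate_and_verify() {
    candidate=$(new_secret_id "$1") || return 1
    approle_login_ok "$2" "$candidate" || return 1
    echo "$candidate"
}
```

A scheduled job could call `rotate_and_verify jenkins "$ROLE_ID"` and update the `vault-secret-id` credential in Jenkins only when the call succeeds.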


4. Terraform Configuration Key Points

Refer to my GitHub repository: aws-eks-terraform.

https://github.com/SubbuTechOps/aws-eks-terraform.git

Brief Explanation of Terraform Files in this Repository:

1. .terraform/modules:

- The directory where Terraform stores downloaded modules, which keeps the code reusable and modular.

2. .gitignore:

- It excludes unnecessary files such as .terraform directories, state files, and local caches from version control.

3. .terraform.lock.hcl:

- It tracks Terraform provider versions to ensure consistency and prevent unexpected updates during deployments.

4. Jenkinsfile:

- This file is not part of Terraform; Jenkins uses it to drive the Terraform execution workflow.

Terraform does not run files one after another. It loads every .tf file in the directory, builds a dependency graph of the resources, and applies them in dependency order:

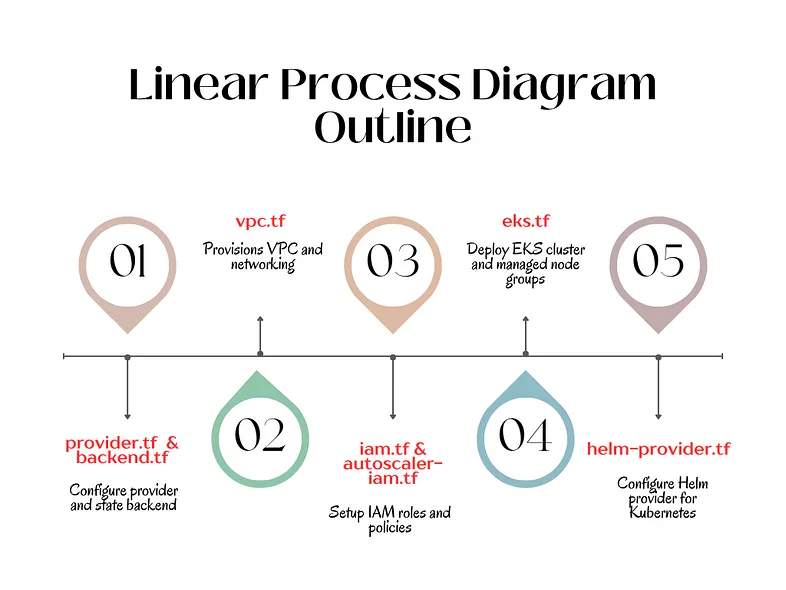

1. Provider and Backend Configuration:

Files Involved: provider.tf, backend.tf

Purpose:

- Configures the AWS provider to interact with AWS services.

- Sets up remote backend (e.g., S3) for storing Terraform state.

Execution:

terraform init

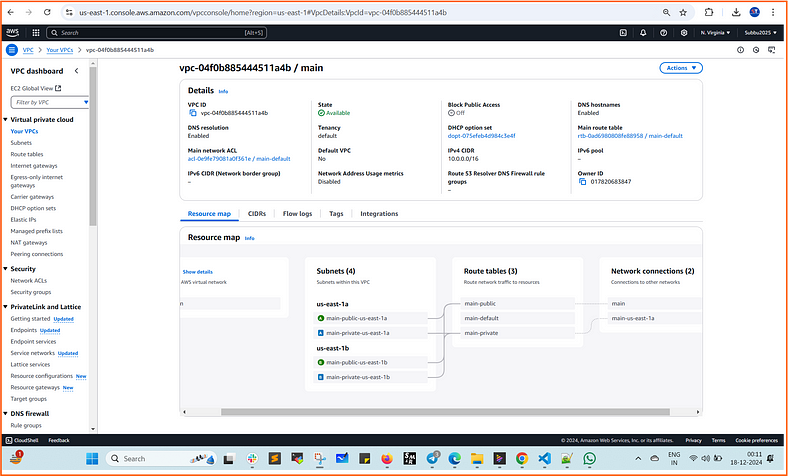
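In a CI job it is usually safer to run the init step non-interactively. The sketch below assumes an S3 backend whose bucket, key, and region are left out of backend.tf and supplied at init time (partial configuration); if the repository's backend.tf already hardcodes them, a plain `terraform init -input=false` is enough. The helper name and the example values are placeholders.

```shell
# tf_init_s3 BUCKET KEY REGION
# Runs a non-interactive init and passes the S3 backend settings on the command line.
tf_init_s3() {
    terraform init -input=false \
        -backend-config="bucket=$1" \
        -backend-config="key=$2" \
        -backend-config="region=$3"
}
```

A call might look like `tf_init_s3 my-state-bucket eks/terraform.tfstate us-east-1`.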

2. VPC Creation:

Files Involved: vpc.tf

Purpose:

- Creates the VPC, subnets (public and private), route tables, and NAT gateways.

- This provides networking infrastructure to host the EKS cluster.

3. IAM Role and Policy Setup:

Files Involved: iam.tf, autoscaler-iam.tf

Purpose:

- Sets up IAM roles and policies for:

- EKS worker nodes.

- Cluster Autoscaler.

- Load Balancer Controller.

Why Now?: IAM roles must be available for EKS components and add-ons to operate securely.

4. EKS Cluster Provisioning:

Files Involved: eks.tf

Purpose:

- Provisions the EKS control plane and managed node groups (On-Demand and Spot instances).

- Integrates IAM roles for worker nodes and enables IRSA (IAM Roles for Service Accounts).

Dependencies:

- Relies on the VPC (from vpc.tf).

- Requires IAM roles (from iam.tf).
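Once the cluster exists, one way to confirm that IRSA is wired up is to compare the cluster's OIDC issuer with the OIDC providers registered in IAM. This is a sketch with hypothetical helper names; it assumes the AWS CLI is configured with the same credentials the pipeline uses.

```shell
# oidc_issuer CLUSTER_NAME REGION
# Prints the cluster's OIDC issuer URL, which IRSA depends on.
oidc_issuer() {
    aws eks describe-cluster --name "$1" --region "$2" \
        --query 'cluster.identity.oidc.issuer' --output text
}

# irsa_provider_registered CLUSTER_NAME REGION
# Returns 0 if an IAM OIDC provider ARN ends with the issuer's ID.
irsa_provider_registered() {
    issuer=$(oidc_issuer "$1" "$2") || return 2
    issuer_id=${issuer##*/}   # last path segment of the issuer URL
    aws iam list-open-id-connect-providers \
        --query 'OpenIDConnectProviderList[].Arn' --output text \
        | tr '\t' '\n' | grep -q "/id/${issuer_id}\$"
}
```

If `irsa_provider_registered` returns non-zero, service accounts annotated with an IAM role will not be able to assume it.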

5. Helm Provider Setup:

Files Involved: helm-provider.tf

Purpose:

- Configures the Helm provider to deploy Helm charts for Kubernetes add-ons.

Why Now?: Helm provider enables deploying add-ons like AWS Load Balancer Controller.

6. Deploy the AWS Load Balancer Controller:

Files Involved: helm-load-balancer-controller.tf, values.yaml

Purpose:

- Deploys the AWS Load Balancer Controller using Helm.

- Allows integration with AWS ALB/NLB for Kubernetes services.

Dependencies:

- It relies on the Helm provider being configured (helm-provider.tf).

7. Cluster Autoscaler Deployment:

Files Involved: autoscaler-iam.tf, autoscaler-manifest.tf

Purpose:

- Deploys the Cluster Autoscaler Kubernetes manifest.

- Configures IAM roles for scaling node groups dynamically.

Dependencies:

- It requires the EKS cluster and node groups to be up (eks.tf).

- IAM roles are already set up (autoscaler-iam.tf).

8. EBS CSI Driver Setup:

Files Involved: ebs_csi_driver.tf

Purpose:

- Installs the EBS CSI Driver to manage persistent storage (EBS volumes) for stateful workloads.

Dependencies:

- EKS cluster must be running (eks.tf).

9. Monitoring Setup:

Files Involved: monitoring.tf

Purpose:

- Sets up monitoring tools like CloudWatch or Prometheus to monitor EKS cluster health and performance.

Dependencies:

- It relies on a running EKS cluster (eks.tf).

10. Add-ons Configuration:

Files Involved: addons.json

Purpose:

- Optional configuration file to enhance EKS functionality with add-ons like monitoring, logging, or ingress controllers.

Dependencies:

- It requires Helm and EKS cluster to be functional.
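After the apply finishes, the add-ons from steps 6 to 8 can be checked with `kubectl rollout status`. The deployment names and the `kube-system` namespace below are assumptions based on the usual defaults for these add-ons; adjust them to whatever the Terraform files in the repository actually deploy.

```shell
# addon_ready NAMESPACE DEPLOYMENT
# Waits up to two minutes for the deployment's rollout to finish.
addon_ready() {
    kubectl -n "$1" rollout status "deployment/$2" --timeout=120s
}

# check_addons
# Checks each add-on in turn and reports the ones that are not ready.
check_addons() {
    failed=0
    for deploy in aws-load-balancer-controller cluster-autoscaler ebs-csi-controller; do
        if addon_ready kube-system "$deploy" >/dev/null 2>&1; then
            echo "ready: $deploy"
        else
            echo "not ready: $deploy"
            failed=1
        fi
    done
    return "$failed"
}
```

`check_addons` returns non-zero if any add-on is not ready, so it can gate a later pipeline stage.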

Linear Process Diagram Outline for this execution:

CI/CD Pipeline Execution Workflow

Jenkins Pipeline Script Execution

Jenkins fetches credentials securely from HashiCorp Vault:

- Vault AppRole authentication retrieves AWS and GitHub tokens.

- Secrets are exported to environment variables (vault_env.sh).
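Because vault_env.sh holds live credentials, it is worth writing it with owner-only permissions and with values quoted so that special characters survive sourcing. The helper below is a sketch of that idea, not the code used in the Jenkinsfile; `write_env_file` is a hypothetical name.

```shell
# write_env_file PATH NAME...
# Writes an `export NAME='value'` line for each named variable to PATH,
# creating the file with owner-only permissions.
write_env_file() {
    env_file="$1"; shift
    ( umask 077 && : > "$env_file" ) || return 1
    chmod 600 "$env_file" || return 1
    for name in "$@"; do
        # Indirect lookup: copy the value of the variable called $name.
        eval "value=\${$name-}"
        [ -n "$value" ] || { echo "unset or empty: $name" >&2; return 1; }
        # Escape embedded single quotes so the value survives sourcing intact.
        escaped=$(printf '%s' "$value" | sed "s/'/'\\\\''/g")
        printf "export %s='%s'\n" "$name" "$escaped" >> "$env_file"
    done
}
```

In the pipeline this would be called as `write_env_file vault_env.sh GIT_TOKEN AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY` after the secrets have been fetched.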

Pipeline Stages:

1. Fetch Credentials from Vault

2. Checkout Source Code

3. Install Terraform

4. Terraform Init: Initializes Terraform backend.

5. Terraform Plan and Apply: Provisions the VPC, subnets, and EKS cluster.

6. Update Kubeconfig and Verify:

- Dynamically retrieves EKS cluster details using AWS CLI.

- Updates kubeconfig for kubectl.

7. Prompt for Terraform Destroy: Ensures controlled teardown.

8. Terraform Destroy (Optional): Destroys infrastructure after confirmation.

Here is how the pipeline is implemented in practice:

Jenkinsfile:

pipeline {

agent any

environment {

VAULT_URL = '' // Vault server URL

}

stages {

stage("Fetch Credentials from Vault") {

steps {

script {

withCredentials([

string(credentialsId: 'VAULT_URL', variable: 'VAULT_URL'),

string(credentialsId: 'vault-role-id', variable: 'VAULT_ROLE_ID'),

string(credentialsId: 'vault-secret-id', variable: 'VAULT_SECRET_ID')

]) {

echo "Fetching GitHub and AWS credentials from Vault..."

// Fetch secrets with error handling

sh '''

# Set Vault server URL

export VAULT_ADDR="${VAULT_URL}"

# Log into Vault using AppRole

echo "Logging into Vault..."

# Jenkins runs sh steps with -x by default; turn tracing off so secrets stay out of the build log

set +x

VAULT_TOKEN=$(vault write -field=token auth/approle/login role_id="${VAULT_ROLE_ID}" secret_id="${VAULT_SECRET_ID}") || { echo "Vault login failed"; exit 1; }

export VAULT_TOKEN

# Fetch GitHub token

echo "Fetching GitHub Token..."

GIT_TOKEN=$(vault kv get -field=pat secret/github) || { echo "Failed to fetch GitHub token"; exit 1; }

# Fetch AWS credentials

echo "Fetching AWS Credentials..."

AWS_ACCESS_KEY_ID=$(vault kv get -field=aws_access_key_id aws/terraform-project) || { echo "Failed to fetch AWS Access Key ID"; exit 1; }

AWS_SECRET_ACCESS_KEY=$(vault kv get -field=aws_secret_access_key aws/terraform-project) || { echo "Failed to fetch AWS Secret Access Key"; exit 1; }

# Export credentials to a file that later stages source
# (the first write truncates any stale file; the rest append)

echo "Exporting credentials to environment..."

echo "export GIT_TOKEN=${GIT_TOKEN}" > vault_env.sh

chmod 600 vault_env.sh

echo "export AWS_ACCESS_KEY_ID=${AWS_ACCESS_KEY_ID}" >> vault_env.sh

echo "export AWS_SECRET_ACCESS_KEY=${AWS_SECRET_ACCESS_KEY}" >> vault_env.sh

'''

// Load credentials into environment

sh '''

echo "Loading credentials into environment..."

set +x  # keep sourced secrets out of the build log

. ${WORKSPACE}/vault_env.sh

echo "Credentials loaded successfully."

'''

}

}

}

}

stage("Checkout Source Code") {

steps {

script {

echo "Checking out source code from GitHub..."

sh '''

# Load GIT_TOKEN written by the Vault stage (each sh step starts a fresh shell)

set +x  # keep the token out of the build log

. ${WORKSPACE}/vault_env.sh

git clone https://${GIT_TOKEN}@github.com/SubbuTechOps/aws-eks-terraform.git

cd aws-eks-terraform

'''

}

}

}

stage("Install Terraform") {

steps {

echo "Installing Terraform..."

sh '''

wget -q -O terraform.zip https://releases.hashicorp.com/terraform/1.3.4/terraform_1.3.4_linux_amd64.zip

unzip -o terraform.zip

rm -f terraform.zip

chmod +x terraform

./terraform --version

'''

}

}

stage("Terraform Init") {

steps {

echo "Initializing Terraform..."

sh '''

# Load AWS credentials written by the Vault stage (tracing off so values stay out of the build log)

set +x

. ${WORKSPACE}/vault_env.sh

cd aws-eks-terraform

# Verify credentials are present without printing them

[ -n "${AWS_ACCESS_KEY_ID}" ] && [ -n "${AWS_SECRET_ACCESS_KEY}" ] || { echo "AWS credentials not loaded"; exit 1; }

../terraform init

'''

}

}

stage("Terraform Plan and Apply") {

steps {

echo "Running Terraform Plan and Apply..."

sh '''

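# Load AWS credentials (tracing off so values stay out of the build log)

set +x

. ${WORKSPACE}/vault_env.sh

# Verify credentials are present without printing them

[ -n "${AWS_ACCESS_KEY_ID}" ] && [ -n "${AWS_SECRET_ACCESS_KEY}" ] || { echo "AWS credentials not loaded"; exit 1; }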

# Run Terraform

cd aws-eks-terraform

../terraform plan -out=tfplan

echo "Running Terraform Apply..."

../terraform apply -auto-approve tfplan

echo "Terraform Apply completed successfully."

'''

}

}

stage("Update Kubeconfig and Verify") {

steps {

echo "Updating kubeconfig using AWS credentials from Vault..."

sh '''

# Step 1: Load AWS credentials

echo "Loading AWS credentials..."

set +x  # keep sourced secrets out of the build log

. ${WORKSPACE}/vault_env.sh

# Step 2: Verify AWS credentials

aws sts get-caller-identity || { echo "Invalid AWS credentials"; exit 1; }

# Step 3: Retrieve EKS cluster name

echo "Retrieving EKS cluster name..."

CLUSTER_NAME=$(aws eks list-clusters --region us-east-1 --query 'clusters[0]' --output text)

# The AWS CLI prints "None" in text mode when the list is empty

if [ -z "$CLUSTER_NAME" ] || [ "$CLUSTER_NAME" = "None" ]; then

echo "No EKS cluster found. Exiting..."

exit 1

fi

echo "EKS Cluster Name: $CLUSTER_NAME"

# Step 4: Update Kubeconfig in Jenkins home directory

echo "Updating kubeconfig..."

KUBE_CONFIG_PATH="/var/lib/jenkins/.kube/config"

mkdir -p /var/lib/jenkins/.kube

aws eks update-kubeconfig --name $CLUSTER_NAME --region us-east-1 --kubeconfig $KUBE_CONFIG_PATH

# Step 5: Set permissions for Jenkins user

chown jenkins:jenkins $KUBE_CONFIG_PATH

chmod 600 $KUBE_CONFIG_PATH

# Step 6: Verify Kubernetes connectivity

export KUBECONFIG=$KUBE_CONFIG_PATH

echo "Verifying Kubernetes connectivity..."

kubectl get nodes

'''

}

}

stage("Prompt for Terraform Destroy") {

steps {

script {

def userInput = input(

id: 'ConfirmDestroy',

message: 'Do you want to destroy the infrastructure?',

parameters: [

choice(name: 'PROCEED', choices: ['Yes', 'No'], description: 'Select Yes to destroy or No to skip.')

]

)

if (userInput == 'Yes') {

echo "User confirmed to proceed with destroy."

env.PROCEED_DESTROY = "true"

} else {

echo "User chose not to destroy. Skipping Terraform Destroy stage."

env.PROCEED_DESTROY = "false"

currentBuild.result = 'SUCCESS' // Explicitly mark the build as successful

return // Gracefully exit the stage

}

}

}

}

stage("Terraform Destroy") {

when {

expression { return env.PROCEED_DESTROY == "true" }

}

steps {

echo "Running Terraform Destroy..."

sh '''

# Load AWS credentials

set +x  # keep sourced secrets out of the build log

. ${WORKSPACE}/vault_env.sh

# Run Terraform destroy

cd aws-eks-terraform

../terraform destroy -auto-approve

'''

}

}

}

post {

success {

echo "Pipeline executed successfully!"

}

failure {

echo "Pipeline failed. Check logs for details."

}

always {

cleanWs()

echo "Workspace cleaned successfully."

}

}

}
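Several stages load vault_env.sh before calling Terraform or the AWS CLI. If you want a sanity check that the credentials arrived, a helper along these lines confirms that the variables are set without writing their values to the build log. It is a sketch; `require_env` is a hypothetical name and not part of the Jenkinsfile above.

```shell
# require_env NAME...
# Fails if any named variable is empty; prints only names and lengths, never values.
require_env() {
    for name in "$@"; do
        # Indirect lookup: copy the value of the variable called $name.
        eval "value=\${$name-}"
        if [ -z "$value" ]; then
            echo "missing: $name" >&2
            return 1
        fi
        echo "$name is set (${#value} chars)"
    done
}
```

Inside an `sh` step this could be used as `require_env AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY || exit 1` right after sourcing the file.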

Post-Execution Verification

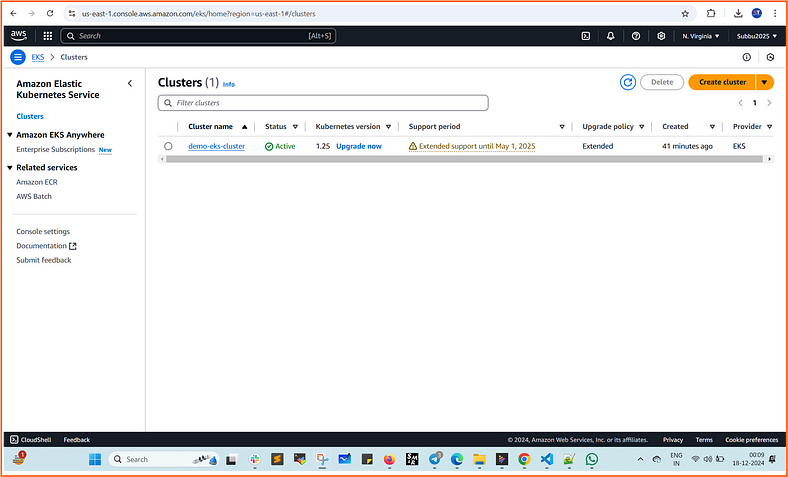

1. AWS Console:

- Navigate to EKS > Verify the cluster and node group creation.

Stage-1: Fetch Credentials from Vault:

Stage-2: Checkout Source Code:

Stage-3: Install Terraform:

Stage-4: Terraform Init:

Stage-5: Terraform Plan and Apply:

Stage-6: Install kubectl and Update Kubeconfig:

Stage-7: Prompt for Terraform Destroy:

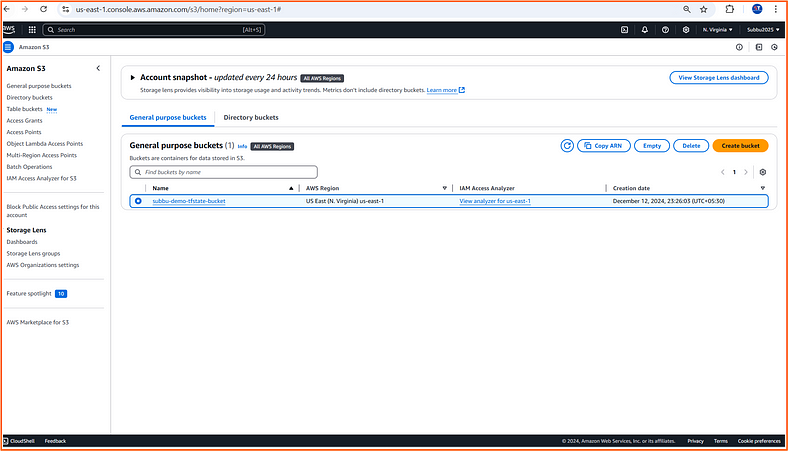

We can now check the resources in the AWS Console:

2. CLI Commands:

- Test Kubernetes connectivity:

Check current user:

whoami

Update kubeconfig for EKS cluster:

aws eks update-kubeconfig --name [cluster-name] --region [region] --kubeconfig ~/.kube/config

List the kubeconfig file with details:

ls -l ~/.kube/config

Set file permissions for kubeconfig:

chmod 600 ~/.kube/config

Add KUBECONFIG environment variable to .bashrc:

echo 'export KUBECONFIG=~/.kube/config' >> ~/.bashrc

Reload the .bashrc file:

source ~/.bashrc

Verify the KUBECONFIG variable:

echo $KUBECONFIG

Check Kubernetes nodes:

kubectl get nodes

List all pods across all namespaces:

kubectl get pods --all-namespaces
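Worker nodes can take a few minutes to join after the cluster is created, so a single `kubectl get nodes` may show fewer Ready nodes than expected. The sketch below polls until a minimum number of nodes report Ready; the function names and the default retry settings are illustrative choices.

```shell
# ready_node_count
# Prints how many nodes report a STATUS of exactly "Ready".
ready_node_count() {
    kubectl get nodes --no-headers | awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# wait_for_nodes MIN_READY [ATTEMPTS] [SLEEP_SECONDS]
# Polls until at least MIN_READY nodes are Ready, or gives up after ATTEMPTS tries.
wait_for_nodes() {
    min_ready="$1"; attempts="${2:-30}"; pause="${3:-10}"
    i=0
    while [ "$i" -lt "$attempts" ]; do
        [ "$(ready_node_count)" -ge "$min_ready" ] && return 0
        i=$((i + 1))
        sleep "$pause"
    done
    return 1
}
```

For example, `wait_for_nodes 2` waits up to about five minutes for two Ready nodes.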

Note:

The pipeline updates the kubeconfig for the jenkins user during execution, which gives CI/CD jobs access to the EKS cluster. When you log in to the Jenkins server manually as a different user (for example, ubuntu), you must configure the kubeconfig for that user explicitly, because each user's kubeconfig lives in their own home directory and is not shared.

Why Does This Happen?

1. Jenkins Pipeline Context:

- The pipeline uses the /var/lib/jenkins/.kube/config file, specifically created for the Jenkins user.

- Kubernetes commands (kubectl) executed by Jenkins are scoped to Jenkins’ home directory (/var/lib/jenkins).

2. Manual Login Context:

- When you log in as Ubuntu, the system looks for the kubeconfig in the default location for the Ubuntu user, which is ~/.kube/config (i.e., /home/Ubuntu

- Since the pipeline kubeconfig is set for the Jenkins user, it is not automatically available for the ubuntu user.
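To see which file kubectl will read for the current user, you can mirror its lookup rule: use `$KUBECONFIG` if it is set, otherwise fall back to the default under the user's home directory. This is a small illustrative helper (the name is made up), and it treats `KUBECONFIG` as a single path even though kubectl also accepts a colon-separated list.

```shell
# effective_kubeconfig
# Prints the kubeconfig path kubectl will use for the current user:
# $KUBECONFIG if set, otherwise the default under the user's home directory.
effective_kubeconfig() {
    echo "${KUBECONFIG:-$HOME/.kube/config}"
}
```

Running it as the jenkins user and again as the ubuntu user shows two different paths, which is why the manual login needs its own `aws eks update-kubeconfig` run.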

3. Terraform State:

- Verify Terraform state is stored in the backend or locally.
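A quick way to check the state is to list the resources Terraform is tracking. The sketch below assumes it is run from a directory where `terraform init` has already completed, so that the configured backend (remote or local) is reachable; the helper name is hypothetical.

```shell
# state_resource_count [DIR]
# Prints the number of resources tracked in the Terraform state for DIR.
state_resource_count() {
    # The subshell keeps the caller's working directory unchanged.
    resources=$(cd "${1:-.}" && terraform state list) || return 1
    if [ -z "$resources" ]; then
        echo 0
    else
        printf '%s\n' "$resources" | wc -l | tr -d ' '
    fi
}
```

A count of 0 after a successful apply usually means the command is reading a different backend or workspace than the pipeline used.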

Conclusion

Bringing together AWS EKS, Terraform, Jenkins, and HashiCorp Vault might seem overwhelming at first, but breaking it into clear steps makes the process smooth and achievable.

By automating infrastructure provisioning, streamlining CI/CD workflows, and managing secrets securely, you’ll create a robust, scalable, and secure Kubernetes environment.

This project not only enhances your DevOps skills but also demonstrates your ability to integrate modern tools effectively — a skill set essential for real-world production scenarios.

Start small, automate smart, and build confidence as you master the tools powering today’s cloud-native world! 🚀


For more insights into the world of technology and data, visit subbutechops.com. There’s plenty of exciting content waiting for you to explore!

Thank you for reading, and happy learning! 🚀